"Distinctive Image Features from Scale-Invariant Keypoints," Lowe, IJCV, 2004

Paper author: David G. Lowe. The following figures are all from this paper.

Novelties, contributions, assumption

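This work introduces a set of distinctive local features that are invariant to image scale and rotation, and robust to a substantial range of affine distortion and illumination change. They can be used to identify a specific object under varying background, illumination, and rotation.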

Questions and promising applications

Object recognition by keypoint matching.

Technical Summarization

Scale Invariant Feature Transform (SIFT) has two main parts: finding keypoints and describing them. The process consists of four steps:

Step 1

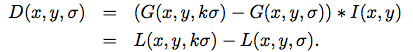

Scale-space extrema detection To find keypoints, the first thing is to detect scale-space extrema points, where are significant different from their neighbors. Koenderink (1984) and Lindeberg (1994) showed that the only possible scale-space kernel is the Gaussian function. Using the Gaussian function convolution on the image with varying scales, calculate the difference from the nearby two scales separated ny a constant factor k for finding the points whose difference is local maximum or minimum.
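As a rough illustration of the difference-of-Gaussian computation, here is a minimal pure-Python sketch. The function names, the border handling (repeating the edge pixel), the 3σ kernel truncation, and the defaults `sigma=1.6`, `k=2 ** 0.5`, `levels=4` are assumptions chosen for the example, not settings taken from this summary.

```python
import math

def gaussian_kernel(sigma):
    """1-D Gaussian weights, truncated at about 3 sigma and normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(image, sigma):
    """Separable Gaussian blur of a list-of-lists image; borders repeat the edge pixel."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    h, w = len(image), len(image[0])

    def clamp(v, hi):
        return min(max(v, 0), hi)

    # Horizontal pass first, then vertical pass on the result.
    rows = [[sum(kernel[j + radius] * image[y][clamp(x + j, w - 1)]
                 for j in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(kernel[j + radius] * rows[clamp(y + j, h - 1)][x]
                 for j in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]

def dog_stack(image, sigma=1.6, k=2 ** 0.5, levels=4):
    """Return levels - 1 images D = L(k * s) - L(s) for s = sigma, k * sigma, ..."""
    blurred = [blur(image, sigma * k ** i) for i in range(levels)]
    return [[[hi - lo for lo, hi in zip(row_lo, row_hi)]
             for row_lo, row_hi in zip(img_lo, img_hi)]
            for img_lo, img_hi in zip(blurred, blurred[1:])]
```

A constant image gives an all-zero stack, while a single bright pixel gives a negative response at its own location in the first difference image, since the wider Gaussian spreads the spot more thinly.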

Two reasons support this method: (a) D is particularly efficient to compute, because the smoothed images L are needed anyway and D is then a simple image subtraction; (b) the difference-of-Gaussian function provides a close approximation to the scale-normalized Laplacian of Gaussian, σ²∇²G, as studied by Lindeberg (1994).
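The approximation in (b) can be checked numerically. The sketch below compares G(x, y, kσ) - G(x, y, σ) with (k - 1)σ²∇²G, using the closed form ∇²G = ((x² + y²) - 2σ²)/σ⁴ · G for the 2-D Gaussian. The function names and the test values `sigma=1.6`, `k=1.01` are assumptions made for illustration; the agreement is only expected to be tight for k close to 1 and away from the zero crossing at x² + y² = 2σ².

```python
import math

def gaussian(x, y, sigma):
    """2-D isotropic Gaussian G(x, y, sigma)."""
    r2 = x * x + y * y
    return math.exp(-r2 / (2.0 * sigma ** 2)) / (2.0 * math.pi * sigma ** 2)

def scale_normalized_log(x, y, sigma):
    """sigma^2 times the Laplacian of G, using the closed-form Laplacian."""
    r2 = x * x + y * y
    return (r2 - 2.0 * sigma ** 2) / sigma ** 2 * gaussian(x, y, sigma)

def dog(x, y, sigma, k):
    """Difference of two Gaussians whose scales differ by the factor k."""
    return gaussian(x, y, k * sigma) - gaussian(x, y, sigma)

def relative_error(x, y, sigma=1.6, k=1.01):
    """Relative gap between the DoG and (k - 1) * sigma^2 * Laplacian(G)."""
    approx = (k - 1.0) * scale_normalized_log(x, y, sigma)
    return abs(dog(x, y, sigma, k) - approx) / abs(approx)
```

With these values the two quantities agree to within a few percent at the points tried, which is consistent with the DoG being a finite-difference approximation of ∂G/∂σ = σ∇²G.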

Step 2. Keypoint localization

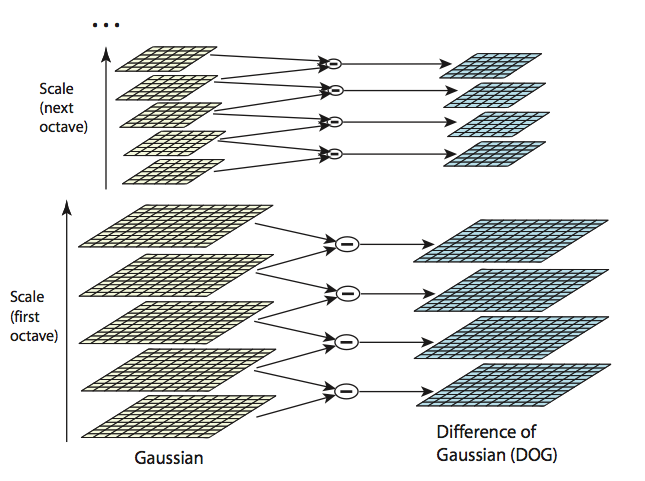

To detect the local maxima and minima of D(x, y, σ), each sample point is compared to its eight neighbors in the current image and to its nine neighbors in each of the scales above and below (see Figure 2), 26 neighbors in total. It is selected only if it is larger than all of them or smaller than all of them.

Three questions remain: (a) what value of k? (b) what value of σ? (c) how many octaves?

After finding the candidate keypoints, the next task is to localize each keypoint accurately and to eliminate edge responses.
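A minimal sketch of the 26-neighbor comparison and of an edge-response check follows. The function names are hypothetical. The edge check uses the ratio of principal curvatures from the 2x2 Hessian of D with threshold `r=10`, which is how the paper describes rejecting edges; the finite-difference stencils here are one common choice and are an assumption of this example. Sub-pixel refinement of the keypoint location is left out.

```python
def is_extremum(dog, s, y, x):
    """True if dog[s][y][x] is strictly above or strictly below all 26 neighbors.

    dog is a list of same-sized 2-D images (lists of lists); s, y, x must be
    interior indices so that every neighbor exists.
    """
    value = dog[s][y][x]
    neighbors = [dog[s + ds][y + dy][x + dx]
                 for ds in (-1, 0, 1)
                 for dy in (-1, 0, 1)
                 for dx in (-1, 0, 1)
                 if (ds, dy, dx) != (0, 0, 0)]
    return value > max(neighbors) or value < min(neighbors)

def passes_edge_test(d, y, x, r=10.0):
    """Reject points whose principal curvatures in image d are too unequal."""
    dxx = d[y][x + 1] + d[y][x - 1] - 2.0 * d[y][x]
    dyy = d[y + 1][x] + d[y - 1][x] - 2.0 * d[y][x]
    dxy = (d[y + 1][x + 1] - d[y + 1][x - 1]
           - d[y - 1][x + 1] + d[y - 1][x - 1]) / 4.0
    trace = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:
        # Curvatures have opposite signs or one is zero: not a stable extremum.
        return False
    return trace * trace / det < (r + 1.0) ** 2 / r
```

A round blob has two similar curvatures and passes; a straight ridge has one near-zero curvature, so its determinant is near zero and it is rejected.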

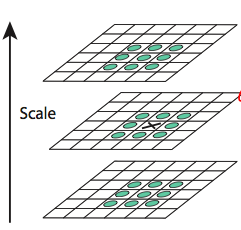

Step 3. Orientation assignment

Calculate the gradient magnitude and orientation of the pixels around each keypoint. To make the descriptor invariant to rotation, orientations are represented relative to the keypoint's dominant gradient direction.
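One simple way to find a dominant direction is a magnitude-weighted orientation histogram. The sketch below uses central pixel differences for the gradient and returns the center of the strongest bin. The names, the square window of `radius=4`, and `bins=36` are assumptions for the example; it also omits the Gaussian weighting of samples and the handling of secondary peaks.

```python
import math

def gradient(image, y, x):
    """Gradient magnitude and orientation at (y, x) from central differences."""
    dx = image[y][x + 1] - image[y][x - 1]
    dy = image[y + 1][x] - image[y - 1][x]
    return math.hypot(dx, dy), math.atan2(dy, dx)

def dominant_orientation(image, cy, cx, radius=4, bins=36):
    """Return the center angle (radians, in [0, 2*pi)) of the strongest bin.

    The window must lie at least one pixel inside the image on every side.
    """
    hist = [0.0] * bins
    for y in range(cy - radius, cy + radius + 1):
        for x in range(cx - radius, cx + radius + 1):
            mag, theta = gradient(image, y, x)
            # Map the angle to [0, 2*pi) and then to a bin index.
            b = int((theta % (2.0 * math.pi)) / (2.0 * math.pi) * bins) % bins
            hist[b] += mag
    peak = max(range(bins), key=hist.__getitem__)
    return (peak + 0.5) * 2.0 * math.pi / bins
```

Subtracting the returned angle from every gradient orientation in the patch before building the descriptor is what makes the description independent of image rotation.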

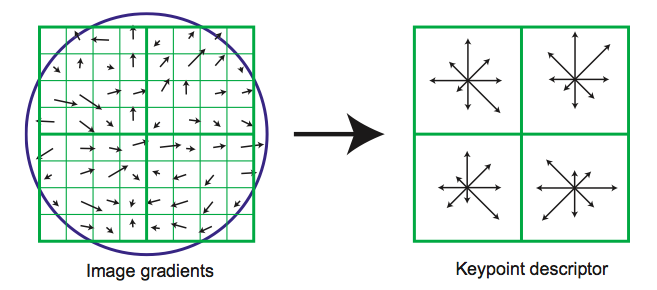

Step 4. Keypoint descriptor

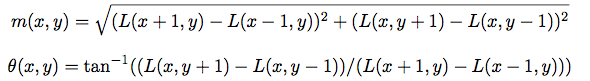

To describe a keypoint, take the n x n blocks surrounding it, each covering m x m pixels, and use k orientation bins per block. Within each block, accumulate the gradient orientations of the m x m pixels into the k bins, weighting each by its gradient magnitude. Each keypoint is therefore represented by a feature vector of n * n * k dimensions.

The vector is normalized to unit length to make the descriptor invariant to illumination effects such as a change in contrast, which multiplies every pixel value (and therefore every gradient) by a constant factor.

Non-linear illumination changes can also occur, caused by camera saturation or by 3D surfaces with differing orientations. To cope with these, the influence of large gradient magnitudes is reduced by thresholding the values in the unit feature vector to each be no larger than 0.2, and then renormalizing to unit length.
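The descriptor steps can be sketched as below. The function names are hypothetical, and the defaults `n=4`, `m=4`, `k=8` (giving 4 * 4 * 8 = 128 dimensions) are filled in for the example where the text above leaves them symbolic. The sketch assumes the orientations have already been made relative to the keypoint orientation, and it leaves out Gaussian weighting and interpolation between bins.

```python
import math

def build_descriptor(mags, thetas, n=4, m=4, k=8):
    """Accumulate an (n*m) x (n*m) gradient patch into n*n histograms of k bins."""
    desc = [0.0] * (n * n * k)
    for y in range(n * m):
        for x in range(n * m):
            block = (y // m) * n + (x // m)
            b = int((thetas[y][x] % (2.0 * math.pi)) / (2.0 * math.pi) * k) % k
            desc[block * k + b] += mags[y][x]
    return desc

def normalize(vec):
    """Scale vec to unit Euclidean length (an all-zero vector is returned as is)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)

def finalize_descriptor(raw, clamp=0.2):
    """Normalize, cap each entry at clamp, then renormalize to unit length."""
    unit = normalize(raw)
    capped = [min(v, clamp) for v in unit]
    return normalize(capped)
```

Multiplying all gradient magnitudes by a constant leaves the final descriptor unchanged, and a single very large entry ends up with less weight than it would have after plain normalization. Individual entries may exceed 0.2 again after the second normalization; the cap is applied before it.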
